Regex 101

There are two main reasons you might want to use “regular expressions” (regex):

- You’re writing code and want to replace something throughout your code that matches a predictable pattern (say, every time you use `my_data_[some numbers]_[more numbers]` you want instead to see `sample_[first group of numbers]_subject_[second group of numbers]`). This goes beyond the typical “find and replace” you would use in Word or other programs, because you want to change multiple values, like `my_data_123_456` and `my_data_094_241`.

- You’re analyzing some text values and need to change them or clean them up. Say you want to split first name from last name, and you presume that the last word in the name phrase is the last name, but you don’t know if the person has one or two first names: “Mary Alice Donnegan”, “Akil Bonner”. Or maybe you want to match every phrase that has “seps” or “sept” in it, regardless of capitalization or the other letters, and replace it with “sepsis”. So “acute septic shock” and “sepsis, unknown origin” would match and be replaced.
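Both scenarios can be sketched in a few lines of Python with the standard `re` module. Treat this as an illustration rather than a recipe: the sample strings come from the examples above, and the names `split_name` and `normalize_sepsis` are made up for this sketch.

```python
import re

# Scenario 1: rename identifiers that follow a predictable pattern.
# Parentheses capture each run of digits; \1 and \2 reuse them in the replacement.
code = "plot(my_data_123_456); summary(my_data_094_241)"
renamed = re.sub(r"my_data_(\d+)_(\d+)", r"sample_\1_subject_\2", code)
print(renamed)

# Scenario 2a: split a name, assuming the last word is the last name.
def split_name(full_name):
    match = re.match(r"(.+)\s+(\S+)$", full_name.strip())
    if match is None:  # a single-word name has nothing to split
        return full_name, ""
    return match.group(1), match.group(2)

print(split_name("Mary Alice Donnegan"))
print(split_name("Akil Bonner"))

# Scenario 2b: any phrase containing "seps" or "sept", in any
# capitalization, is replaced by the single word "sepsis".
def normalize_sepsis(phrase):
    return "sepsis" if re.search(r"sep[st]", phrase, re.IGNORECASE) else phrase

print(normalize_sepsis("acute septic shock"))
print(normalize_sepsis("sepsis, unknown origin"))
```

The last-word assumption in `split_name` is exactly the presumption stated above, so two-word last names would be split incorrectly.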

Regular expressions are tricky to learn but if you work with strings (character data) frequently, whether that’s from free-text selections in a REDCap database or a problem list from Epic, the learning curve is definitely worth it!

The idea of regular expressions is that characters can be described in a few ways:

- What kind of character is it? A digit, 0-9? That’s `\d`. Any character at all (except, by default, a line break)? That’s `.`. A whitespace character like space or tab? That’s `\s`. The character “u”? `u`. The upper-case letters only? `[A-Z]`

- How many characters of the same kind are in a row? Zero or more? That’s `*`. At least one, maybe more? That’s `+`. One to three, but not less than one or more than three? That’s `{1,3}`.

- Are some characters to be avoided? E.g. you’re searching for 1 or more digits followed by something that’s not a letter: `\d+[^A-Za-z]`

- Do some characters need to be saved or pulled out of a string? For example, do I want to pull out just the first number sequence in every phrase that looks like `subject_[numbers]_studylocation_[more numbers]`? Parentheses mark the part to save (a “capture group”): `subject_(\d+)_studylocation_\d+`
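To make these building blocks concrete, here is a small Python sketch that tries each one with the standard `re` module. The sample strings are invented for illustration.

```python
import re

# \d is a digit; + means "one or more in a row".
digit_runs = re.findall(r"\d+", "ward 3456, bed 7")
print(digit_runs)           # ['3456', '7']

# {1,3} means one to three in a row, so a longer run is split into chunks.
short_runs = re.findall(r"\d{1,3}", "ward 3456, bed 7")
print(short_runs)           # ['345', '6', '7']

# [A-Z] matches upper-case letters only.
capitals = re.findall(r"[A-Z]", "Ward 3456, Bed 7")
print(capitals)             # ['W', 'B']

# A literal "u" followed by . (any single character).
u_pairs = re.findall(r"u.", "ub u2")
print(u_pairs)              # ['ub', 'u2']

# \s is whitespace (spaces, tabs, ...); split on runs of it.
words = re.split(r"\s+", "space \t tab")
print(words)                # ['space', 'tab']

# * means "zero or more", so both the bare and the longer form match.
print(bool(re.fullmatch(r"sub[a-z]*", "sub")))      # True
print(bool(re.fullmatch(r"sub[a-z]*", "subject")))  # True

# [^A-Za-z] means "any one character that is NOT a letter".
# Note that it consumes that character (here, the comma).
not_letter = re.findall(r"\d+[^A-Za-z]", "dose 40, then 5x")
print(not_letter)           # ['40,']

# Parentheses save ("capture") part of the match for later use.
match = re.search(r"subject_(\d+)_studylocation_(\d+)", "subject_0042_studylocation_17")
first_number = match.group(1)
print(first_number)         # 0042
```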

Ye Olde Regex Checker

The absolute best way to learn about regex is to use a “regex checker”. There are several good ones online. Let’s check out, for example, https://regex101.com/ in this ~15 minute video:

Once you’ve practiced with some data (not PHI please!) in an online regex checker, and honed your regular expression, you’re ready to implement it in code. Let’s look at how to clean up a field using regular expressions in both R and Python.
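If your sample data can’t leave your machine, a few lines of Python can stand in for an online checker. This is only a sketch: `check_pattern` is a hypothetical helper written for this example, not part of any library, and the sample strings are invented.

```python
import re

def check_pattern(pattern, samples, flags=0):
    """Return (sample, captured groups or None) for each sample string."""
    compiled = re.compile(pattern, flags)
    report = []
    for sample in samples:
        match = compiled.search(sample)
        # None makes non-matching samples easy to spot in the report.
        report.append((sample, match.groups() if match else None))
    return report

for sample, groups in check_pattern(r"(\d+)-(\d+)", ["12-34", "no digits here", "x5-6y"]):
    print(f"{sample!r}: {groups}")
```

Running a pattern against a handful of known-good and known-bad strings like this gives the same kind of sanity check as the online tool, without pasting anything into a browser.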

Programming and Regex

Our scenario is that we have asked our research assistants to enter subject identifiers as “subject”, an underscore, the subject number (decided by each participating lab), then “location” and the participating lab identifier. Unfortunately, not everyone entered the data the same way. Here’s an example of some of our data:

| Subject Identifier |
|---|
| subj_101_location_042 |
| subject_102_location_042 |
| subj.105_location_042 |
| subj_110_locattion_042 |
| subject_111_loc_042 |
| sub-012-location-055 |
| Subject_015_loc-055 |
| SUBKECT_017_LOCATION_055 |
| subj_025_location_055 |

And this is how we’d like it to look:

| Subject Identifier |
|---|
| s_101_l_042 |
| s_102_l_042 |
| s_105_l_042 |
| s_110_l_042 |
| s_111_l_042 |
| s_012_l_055 |
| s_015_l_055 |
| s_017_l_055 |
| s_025_l_055 |

Optional: want to see how I quickly changed the data from the “current” to “desired” state using Find/Replace in the Sublime Text 3 text editor? See here:

R

Let’s create some “bad” data in R and then transform it! You can read on below or try out the code yourself.

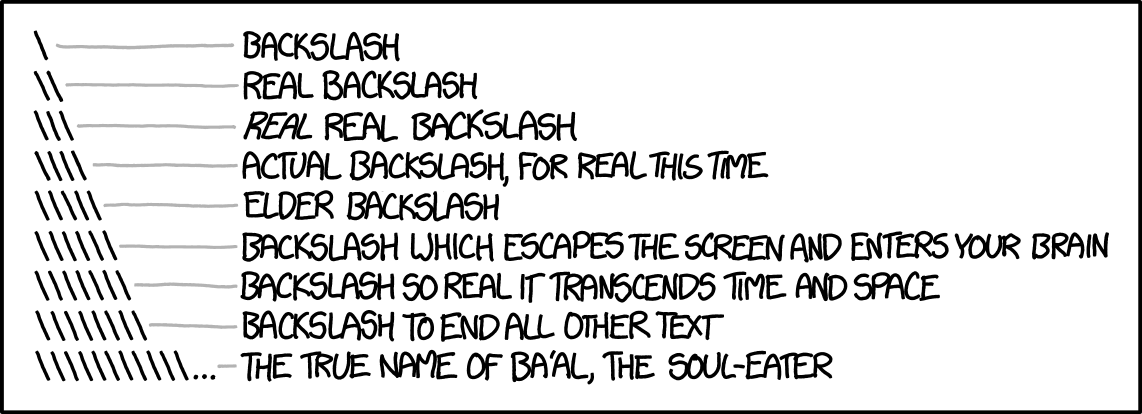

Note that R suffers from the “need for extra backslashes”. This is the XKCD take on the backslash conundrum (used with permission)1:

# Bad data!

subjects <- c("subj_101_location_042",

"subject_102_location_042",

"subj.105_location_042",

"subj_110_locattion_042",

"subject_111_loc_042",

"sub-012-location-055",

"Subject_015_loc-055",

"SUBKECT_017_LOCATION_055",

"subj_025_location_055")

# Extract our "capture groups".

# Note the "extra" backslashes we have to use to indicate

# literal uses of special characters as well as use the `\d` character!

regex_pattern <- "sub[a-zA-Z]*[\\.\\-_](\\d+)[\\.\\-_]loc[a-zA-Z]*[\\.\\-_](\\d+)"

# note -- this is case sensitive, so "Sub" or "SUB" wouldn't match.

# We handle this by forcing our strings to be all lower case before

# we evaluate them!

# Load the package we need

library(stringr)

str_match(tolower(subjects), regex_pattern)

When we issue the str_match command, it prints its results to the console, which allows us a sanity check. Does this look right?

> str_match(tolower(subjects), regex_pattern)

[,1] [,2] [,3]

[1,] "subj_101_location_042" "101" "042"

[2,] "subject_102_location_042" "102" "042"

[3,] "subj.105_location_042" "105" "042"

[4,] "subj_110_locattion_042" "110" "042"

[5,] "subject_111_loc_042" "111" "042"

[6,] "sub-012-location-055" "012" "055"

[7,] "subject_015_loc-055" "015" "055"

[8,] "subkect_017_location_055" "017" "055"

[9,] "subj_025_location_055" "025" "055"

Yep, that looks good. That means we can finish up with:

# Looks good, so we can use str_match to create our new naming convention.

# We "paste together", with no separator (sep = ""), the strings we want.

new_subjects <- paste("s_",

str_match(tolower(subjects), regex_pattern)[,2],

"_l_",

str_match(tolower(subjects), regex_pattern)[,3],

sep="")

new_subjects

What does new_subjects look like?

> new_subjects

[1] "s_101_l_042" "s_102_l_042" "s_105_l_042" "s_110_l_042" "s_111_l_042"

[6] "s_012_l_055" "s_015_l_055" "s_017_l_055" "s_025_l_055"

Python

Let’s do the same thing in Python: create some bad data, then use regex to find and fix the inconsistencies. You can read on below or try out the code yourself.

# Bad data!

subjects = ["subj_101_location_042",

"subject_102_location_042",

"subj.105_location_042",

"subj_110_locattion_042",

"subject_111_loc_042",

"sub-012-location-055",

"Subject_015_loc-055",

"SUBKECT_017_LOCATION_055",

"subj_025_location_055"]

# import the "regular expressions" or re module

import re

# Extract our "capture groups".

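# The r prefix makes this a raw string, so the backslashes reach the regex

# engine as written instead of being read as (invalid) string escapes.

regex_pattern = r"sub[a-zA-Z]*[\.\-_](\d+)[\.\-_]loc[a-zA-Z]*[\.\-_](\d+)"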

# We use "list comprehension"

# (aka "hey python, make a list made up of the things I'm about to tell you")

# to create a list of matches.

results = [re.search(regex_pattern, string, re.IGNORECASE) for string in subjects]

# Another list comprehension. Does it look right?

[result.groups() for result in results]

Let’s check out results:

>>> [result.groups() for result in results]

[('101', '042'), ('102', '042'), ('105', '042'), ('110', '042'),

('111', '042'), ('012', '055'), ('015', '055'), ('017', '055'),

('025', '055')]

OK! Looks good, so we can keep going…

# Yep, it sure looks good to me! Create a list of output.

["s_" + result.group(1) + "_l_" + result.group(2) for result in results]

What’s the result of that?

>>> ["s_" + result.group(1) + "_l_" + result.group(2) for result in results]

['s_101_l_042', 's_102_l_042', 's_105_l_042', 's_110_l_042',

's_111_l_042', 's_012_l_055', 's_015_l_055', 's_017_l_055',

's_025_l_055']
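One caveat with the Python approach above: `re.search` returns `None` for a string that doesn’t match, and calling `.groups()` on `None` raises an error. The sketch below shows one possible way to guard against that. It is an illustration, not the only way to do it: `clean_identifier` and the `"bad entry"` value are made up for this example, and it uses `fullmatch`, which is stricter than `search` because the whole string has to fit the pattern.

```python
import re

# Same idea as the pattern above. Inside [...] a dot is literal, and a
# hyphen placed last is literal too, so [._-] needs no backslashes.
pattern = re.compile(
    r"sub[a-zA-Z]*[._-](\d+)[._-]loc[a-zA-Z]*[._-](\d+)",
    re.IGNORECASE,
)

def clean_identifier(raw):
    """Return s_<subject>_l_<location>, or None if raw doesn't fit the pattern."""
    match = pattern.fullmatch(raw.strip())
    if match is None:
        return None
    # expand() fills the template with the captured groups.
    return match.expand(r"s_\1_l_\2")

subjects = ["subj.105_location_042",
            "Subject_015_loc-055",
            "SUBKECT_017_LOCATION_055",
            "bad entry"]  # hypothetical value that does not match

cleaned = [clean_identifier(s) for s in subjects]
print(cleaned)
```

Returning `None` for non-matching rows keeps them visible, so they can be reviewed by hand rather than silently dropped or crashing the script.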

1. Creative Commons Attribution-NonCommercial 2.5 License.