Distributed Humaning

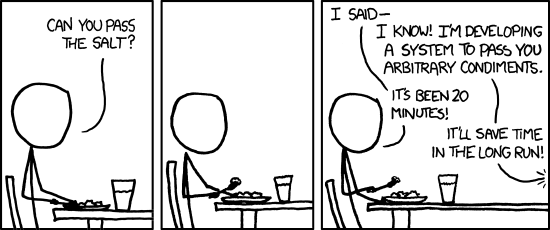

Not long ago I oversaw the building of a Selenium bot to handle some repetitive clicking required in a massive update to user ids in a REDCap database as well as discouraging someone else from pursuing a computational approach at all. Let’s consider the wisdom of one of the experts I often consult for thorny issues, XKCD (used with permission)1:

When I approach a problem as a programmer, I operate by the “DRY” principle – don’t repeat yourself. I understand the temptation to develop a system to “pass arbitrary condiments.” It really can save time in the long term. Maybe. It also gives a sense of accomplishment and often new learning of a content area to the programmer. On the other hand, sometimes humans are the cheapest and most effective solution to a problem that could be solved (with greater expense and time) by a computer. As a project manager and someone who keeps her eye on budget numbers and flexibility, I appreciate that sometimes coding something isn’t the answer.

Consider the rise of the “citizen scientist”. Instead of “distributed computing” – remember over ten years ago when scientists recruited people to sign up their personal computers to help work on protein folding? – a recent planetary discovery program relies on “distributed humaning” (my term) or crowdsourcing signal detection.

A few months ago I had a conversation with someone who wanted to do deep learning on texts to extract content and meaning to find (and possibly) construct meaningful questions from a complex text. We talked about machine learning and natural language processing, and then I asked, “Have you considered mturk?” Amazon’s Mechanical Turk is cheekily named after an 18th century robotic hoax, and allows requestors to have real humans do things like identify hard-to-read text, summarize paragraphs, give opinions, and so forth. While human-driven prototypes are often the first proof of concept in a possible computational application, it’s also more than reasonable to consider using crowdsourcing as a legitimate source of data, instead of a temporary fix until a computational algorithm can be developed. It’s all about finding the right tool for the job!

Although I’m all about scripting for reproducibility in science, sometimes software development isn’t the way to go.

On the other hand, consider an assignment I gave a work study employee in a research lab I did some work for recently. Without getting into the whys and hows, we had to change multiple REDCap records in the same way, and a mass download/update/upload mechanism just wouldn’t work. I suggested that while the time gain might be minimal, if any, it was worthwhile to develop a Python script that would use Selenium, and perhaps a TCL library, to open a browser and execute the work following the rules she coded. Why, in this case, did I suggest automating something, even though the labor costs for having a human do this (work study employees aren’t pricey) might suggest a human approach?

Here, in no particular order, were my criteria:

- This student wants to be a data scientist. She needs to develop chops in Python. This project would effectively address two end goals.

- The problem is well-understood and can be stated in program logic fairly easily.

- There is no special human understanding required (language processing, facial recognition, etc.) that she would do much better at than a computer

- I want her to understand the mechanism we’re trying to reproduce, and having to code it means understanding it well.

- We might have similar needs in the future, and having her code a solution now will save me time and money later.

In short, whether brute force or computational elegance is the better solution is almost always contextual.

-

Creative Commons Attribution-NonCommercial 2.5 License. ↩